The views shared in this opinion article are the author's own and do not necessarily reflect the views of SF Classical Voice or its employees.

Not long ago, music meant assembly. From sold-out concerts to highly anticipated album releases, fans enjoyed music as a collective experience. Even in the early days of streaming, for all its automation, platforms still placed listeners inside a common archive — endlessly rearranged but broadly shared.

But as advancements in technology make the listening experience startlingly personal, that sharedness is slipping.

Playlists increasingly mirror the listener. Where listeners once searched outward for musical discovery and human connection, they now craft their own custom playlists in an intentional process that has begun to resemble composition.

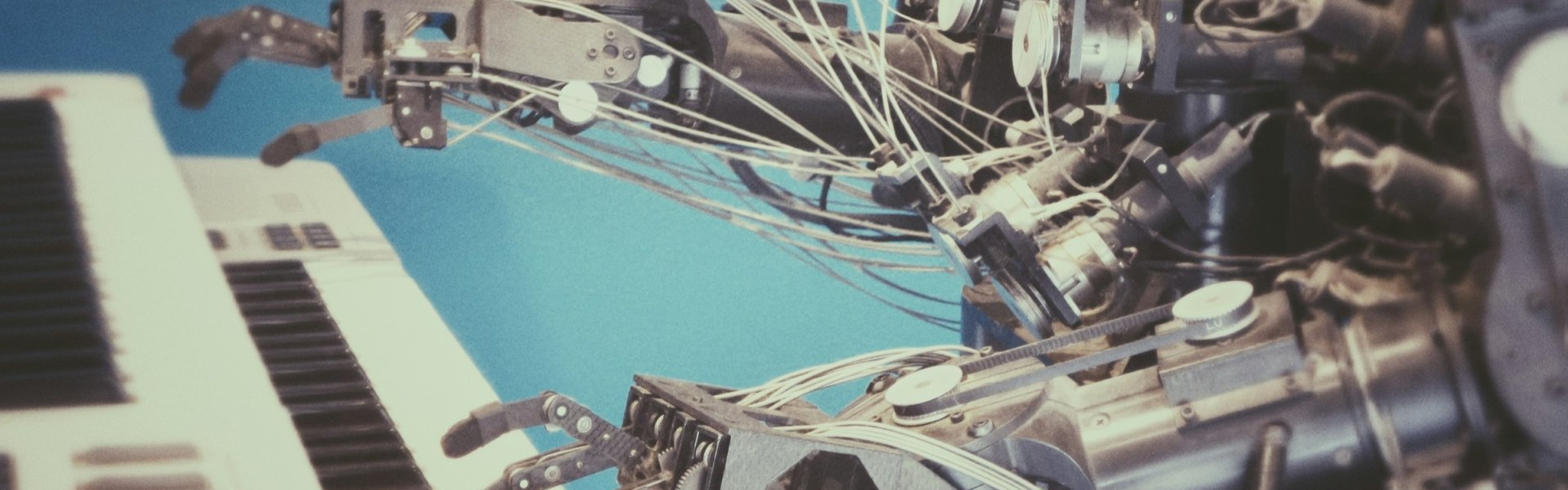

The rise of artificial intelligence in music builds upon this experience. Now, personalized songs can be generated by an individual, for the individual, on the spot with a simple prompt. To some, this shift feels empowering, and in many ways it is. People who once lacked access to instruments, training, or time can now create music that reflects their inner life at the click of a button. However, with such frictionless tools, the long-revered process of developing one’s personal voice suddenly becomes optional — not to mention the questionable ethics of sourcing from human artists without their overt consent.

Most AI music systems begin by studying past works, then smoothing them into new outputs that feel familiar. The results are often polished and pleasing — technically impressive and immediately usable — but they also tend to feel flat.

A subtle diminishment in quality occurs during this aggregative process. The edges of a score or performance grow dull. The half-steps that make a melody ache, the drummer who leans a bit behind the beat, the glorious risks and mistakes that instigate musical discovery: all are left behind. Precision isn't the enemy, but AI music does lack variety.

Every musical breakthrough in history arrived because someone broke what had previously worked: a guitar was distorted, an echo left uncorrected, a machine used against its own instructions. Innovation relies on happy accidents. Deviation, not imitation, is where style is born.

As each listener disappears into a private resonance chamber, whether through self-curated streaming or AI-generated music, our culture's shared tempo begins to drift. The dance floor, once a near-perfect metaphor for connection, grows quiet.

Perhaps that is why live performance is entering a renaissance and why it lands so forcefully right now. After hours of solitary listening, experiencing the sheer physicality of sound (air flowing, breath syncing) with others who are moved by it can feel almost holy.

Although intelligent technology will appear in live performance spaces (through responsive visuals, generative accompaniments, and data-driven acoustical engineering), the stage will still run on human connection. It may even counter the technology by moving in the opposite direction: rougher, more participatory, more chaotic.

For performers, today’s technology could reframe their musicianship. The goal is no longer flawless execution, but presence: the ability to respond, adapt, and hold a room in real time. The question for musicians isn’t whether AI will enter the room. It’s which parts of the experience remain irreducibly human, and therefore worth gathering for.

AI tools haven't stripped meaning out of music; they've multiplied it. The challenge, however, is to preserve what matters to us most: the urge to feel in sync with others and to communicate feeling through sound. Musicians may become guides rather than icons, designing experiences, redefining liveness, and reminding us that beauty still lies in imperfection.